“Sacrifice?”

“Out!” shouts someone at a table to vehement nods.

“Generosity?”

“In!” another table cheerfully declares.

Humane metrics

In ways we couldn’t have anticipated, a workshop collaboratively hosted earlier this year by the Obermann Center, the Vice President for Research, International Programs, and the College of Liberal Arts and Sciences was valuable preparation for a campus engaging in strategic planning during a pandemic. Together, these campus offices invited a broadly representative group of faculty, staff, and graduate students from three colleges, campus libraries, and museums to participate in the February 21 Humane Metrics Initiative for the Humanities and Social Sciences (HuMetricsHSS) workshop. The project is funded by the Andrew W. Mellon Foundation and led by a team of eight visionaries from the academic, commercial, and nonprofit sectors.

The daylong discussion at the IMU ranged widely and was charged by a spirit of inquiry. Thirty participants explored two key questions: What are our most closely held values at the UI? How do our policies and practices express those values? Throughout the day, the workshop leaders’ prompts nudged the conversation from abstract principles to concrete practices. What criteria drive the assessment of academic work when tenure and salary review committees meet each year? What must work do to be considered excellent? Follow tradition? Be innovative? Can it live outside the lines of disciplines? Cross disciplines? Must it be single-authored? Collaborative? Topical? Ethical? Or are we assessing art and scholarship via surface metrics like grant dollars awarded, the status of publishing venues, the number of citations, or Twitter mentions?

One of the challenges we face in evaluation is that quantitative metrics neglect many of the values central to the arts, humanities, and social sciences, like the hard work required for collaboration, inclusivity, and contributing to the public good. Nor do the usual metrics capture the multiple dimensions of even the most conventional scholarly practice, including the important, time-consuming work of peer review, syllabus development, conference organizing, and intellectual leadership. When we ignore these crucial aspects of the conditions on which excellence is founded—even penalizing faculty who devote time to the good of the whole—the academy pays a heavy price. We risk losing talented, publicly engaged educators and leaders—especially faculty from underrepresented groups—at an alarming rate.

Putting values into practice

Enter HuMetrics. In their workshops at universities, colleges, and professional organizations around the country, the team’s goal is to empower people at all levels of an institution by helping organizations identify their core values and align reward mechanisms with those values—in every domain from grades and funding to promotion and tenure.

“We have to be intentional in everything we do about putting our values into practice,” says Christopher Long, Michigan State University Dean of the College of Arts and Letters, philosophy and classics professor, editor of Public Philosophy Journal, and one of the project’s founding members. “That is the indicator of quality—the degree to which we put our values into practice.”

Obermann Center Director Teresa Mangum first learned about HuMetrics through the Mellon-funded Humanities Without Walls program, in which the Obermann Center is one of 16 institutional partners. Knowing that HuMetrics planned to conduct intensive workshops at select colleges and universities across the U.S., she reached out to the team to ask if they might come to Iowa.

“Discussions about evaluation usually start with lists,” says Mangum. “As in, list what you’ve done in three areas—teaching, research, and service. But the HuMetrics group asks much more provocative questions: What are an institution’s values? How is your work supporting those values? It was bracing to be reminded that teaching, research, and service are not end goals in and of themselves, but rather means to the far more ambitious and inspiring goal of living your collectively agreed upon institutional values.”

Candid conversation

HuMetrics members Christopher Long; Nicky Agate, then Assistant Director of Scholarly Communication and Digital Projects at Columbia University and now Assistant University Librarian for Research Data and Digital Scholarship at Penn; Jason Rhody, Program Director of the Social Science Research Council’s Digital Culture Program; and Penny Weber, Projects Coordinator for the Social Science Research Council’s Digital Culture Program began the workshop with a candid conversation about the values that inspire scholars rather than the things the academy generally counts and tracks. “It’s a crucial discussion,” says Long, who begins every HuMetrics workshop this way. “It reminds participants why they got into higher ed in the first place.”

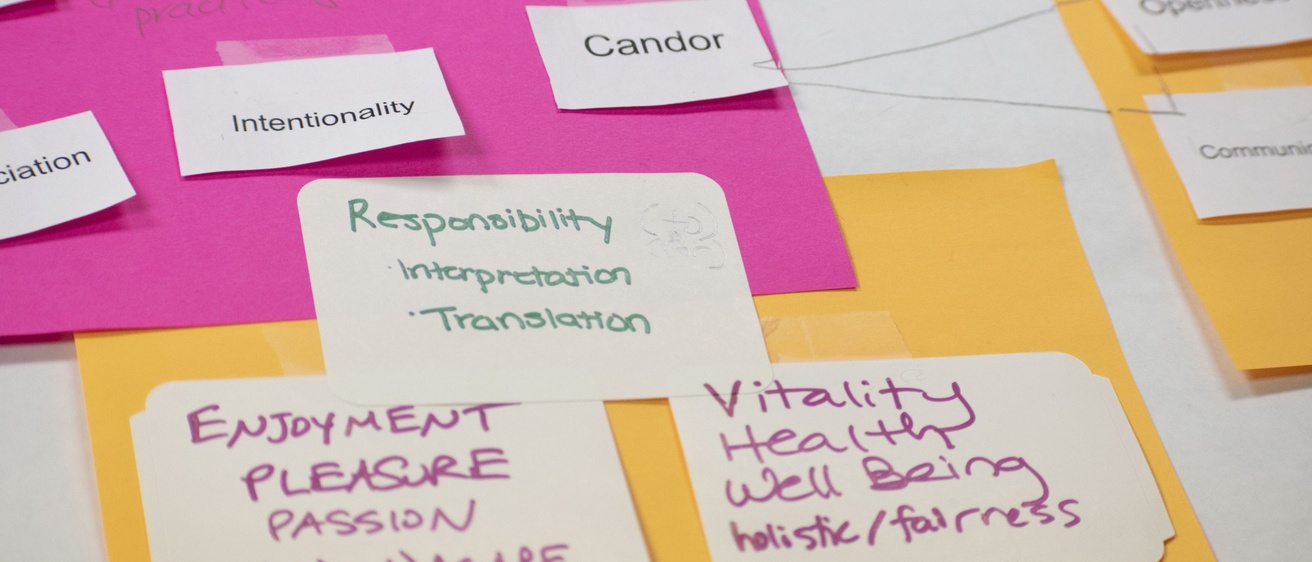

Participants then broke into small groups to create their own values frameworks. Each table held a stack of cards with one value printed on each card, plus a few blank cards for additional values. Together, group members considered what values motivated their work and attracted them to careers in higher education: values like depth, breadth, equity, well-being, intentionality, self-care, empathy, reciprocity, transparency, humility, inclusivity, public good, reproducibility, risk-taking, learning from failure, originality, imagination, and accountability. In lively debates, groups negotiated their accepted values. Once they decided which values to keep, each group organized those values into categories, ranked the categories by importance, and then reflected on the ways in which those values currently play out at the UI and in the scholars’ own professional lives.

No two frameworks were identical—but that was perfectly fine. In fact, says Long, trying to build consensus would have missed the point: “There is no universal framework. The goal of these workshops is simply to engage scholars and administrators in the process of tearing apart, interrogating, and rebuilding a values framework.” His colleague Nicky Agate further explains: “The idea is that an individual scholar would be able to talk about the intentionality and values behind their work in conversations with colleagues and administrators, but—crucially—also that a values-based framework would already have been established institutionally as something of value.”

Participants in the UI workshop then brainstormed alternative indicators, both quantitative and qualitative, for the evaluation of the many forms that contributions to their fields could take. For the humanities and social sciences, this included finding ways to value the process of conducting scholarship (during which so much of the learning and discovery takes place, and which often includes teaching) as well as the final product. What if, for instance, scholars were rewarded for publishing their failures? What if, instead of counting citations that appeared only in articles and books, review committees counted citations in syllabi, which potentially reach a much wider and more diverse audience than scholarly publications generally do?

How we got here and what’s at stake

Why do so many institutions use blunt, surface metrics to evaluate the complex, dynamic processes of research, teaching, and learning? Where are the qualitative measures? Long traces this emphasis on “counting” to the Second Industrial Revolution, when the U.S. economy, including the education sector, began quantifying its impact in whatever ways it could, from the number of widgets produced to student credit hours to citation numbers. Metrics have remained this way because universities have felt forced to quantify their successes in a data-driven era of financial austerity.

Today, most institutions’ systems of reward, promotion, and tenure fail to credit the time-consuming yet largely invisible work that makes or breaks a university’s ability to recruit and retain talented students, faculty, and staff. For example, the quality of a faculty member’s contributions to committee work and mentoring is far more important to success than the number of committees one serves on. Similarly, we all recognize the qualitative difference between the wisdom required for inspiring, creative, informed intellectual leadership and what one might call a “middle management” mindset.

Consider the work of department chairs. A compassionate, visionary department chair often chooses to sacrifice her research to help her faculty and students thrive, taking the extra time required to nominate faculty and staff for awards, to write grants to enrich the major, and so on. The department and university benefit enormously because her values require that, as a leader, she prioritize collaborative over personal success. But how do we reward the quality of her performance, compared with that of another chair who does the minimum required and not even especially well? Focusing solely on conventional metrics disincentivizes this essential work, endangering, in Rhody’s words, “the health of the academic ecosystem.” And because this labor is disproportionately shouldered by women and people of color, review committees end up promoting fewer of these faculty members, many of whom are outstanding leaders, to associate or to full professor. This harms those individuals and ultimately weakens the institution.

Discouraging innovation

Increasingly, arguments are surfacing that the overemphasis on the publication of single-authored works has had the unintended effect of discouraging untenured faculty from innovative, cutting-edge, cross-disciplinary, and collaborative work. If a department doesn’t clearly value risk-taking, young scholars quite reasonably avoid making waves in an ultra-competitive job market. Scholars whose values and intellectual interests lead them to fields like digital scholarship; public and/or community-engaged research; interdisciplinary and collaborative research; and scholarship dedicated to advancing diversity, equity, and inclusion can also find themselves at odds with conventional metrics. Success in these emerging fields might take forms we don’t usually count: a digital project built by faculty, librarians, and students; a community project that produces a food pantry and a white paper on food scarcity; an exhibition that mines community archives to write immigrants back into state or national histories. Exacerbating the problem, their “undisciplined” work might be perfect for new interdisciplinary journals that lack the immediate name recognition and credibility of disciplinary flagship publications.

Time and again, the HuMetrics team has heard untenured scholars in the humanities and social sciences lament that they need to “save” the work they believe would speak to their own and institutional values with greatest impact until after they achieve tenure. That unfortunate reality can lead to failure: failure to produce exciting, field-changing knowledge; failure for scholars to pursue their most fulfilling work; failure to attract students who want to work with instructors passionately engaged in innovative research; and, ironically, failure for the university to live out its own values of fostering discovery and contributing to the public good.

A toxic culture

What’s worse, says the team, is that superficial evaluation metrics foster a toxic culture of competition that permeates the academy, leading to everything from unethical scholarly practices like “citation cartels” (in which groups of authors and journal editors cite each other to artificially inflate their articles’ metrics) to criminal abuses of power. Universities without clear, collectively determined values can make terrible mistakes.

Long experienced this firsthand, witnessing two of the biggest sexual abuse scandals in the history of higher education—first when he was Associate Dean of the College of the Liberal Arts at Penn State and later as Dean of the College of Arts and Letters at Michigan State. “Those incidents [which occurred in athletics] may seem separate from academic work,” he says, “but they’re symptoms of a deeper cultural problem in higher education associated with the abuse of power. The ways in which we faculty interact with our graduate students, undergraduate students, staff, and each other is infused by a culture of competition and self-interest. That toxic culture has to change.”

The future of higher education depends on it. Many of today’s students and early career scholars want to put their values into practice in the “real world,” and they need role models who empower them to do that. In Long’s words, “we realize we have to embody these values of diversity, equity, inclusion, reciprocity with communities, humble participatory research engagement, and other modes of interacting that have a deep human dimension that no AI or other technologically designed entities can do, partly because we need to thrive in the future, but partly because we need to hold those technological advancements accountable.” Regularly revisiting our institutional values and setting time aside to discuss how our policies and practices support those values are the best possible preparation for a volatile, uncertain, and dynamic future.

Moreover, the goal of the HuMetrics team is to include the entire campus in defining and supporting the mission of their university. The team emphasizes that the values-based framework process is not just for faculty but for everyone—and Long has seen strides in morale, community, and equity among MSU staff, who feel empowered by being included in the conversation about recognizing all different kinds of labor. One huge benefit of acknowledging and valuing labor (not just as product), says Long, is that “You start to see how many hands are involved in the work that we do in the academy, from graduate student to senior faculty member to librarian to custodian. Everything we do is collaborative.”

How we fix it

HuMetrics’ approach is to shift the “three legs of the tenure stool” from teaching, research, and service to broader questions that reflect specific values of humanities and social science scholars and of higher education more generally. Ideally, says Weber, institutions would be able to clarify and illustrate their institutional values and to ask faculty and staff to discuss how they can use their talents and training to support those values. Moreover, institutions would start those conversations in the hiring process. This forthright, consistent approach would clarify for everyone what matters and why during moments of evaluation. The tenure and promotion process would leave room for variation, as long as an individual’s or group’s work contributed to and supported the shared values of a department or unit. Faculty and staff would set clear goals with their supervisors using agreed-upon institutional values as their lodestar. When the next year’s review committee met, they then might ask not “How many publications do you have?” but “How have you shared knowledge, expanded opportunity, and stewarded resources? What does the arc of your career look like over the next five years? How can we articulate indicators that you’re on your way there?”

Right now, the team is conducting workshops at Big Ten universities, as well as smaller colleges, nonprofits, and professional organizations, many of which, they say, were already having conversations about evaluation practices. “We’re there to enrich, to broaden the conversations that are already happening,” notes Rhody. “We don’t intervene in the process but rather help people have these conversations on their own campuses—to inspire them to push back and come up with alternative evaluation processes and metrics.”

Their goal is ambitious but achievable. The team says they’re inspired by a growing sense of possibility: “Every workshop we have, every conversation we have with a scholar, every time we empower someone to think about their values and how they’re putting them into practice—that elevates their expectations of their institution and begins to push this change forward,” says Long.

Ambitious goals

The group’s longer-term “to do” list for higher education is ambitious:

- Clear up confusion about who determines promotion and tenure policies and outcomes

- Provide faculty and administrators tools to put values-based assessment infrastructure in place and develop new metrics that balance our attention to quantity and quality

- Dismantle the pervasive “culture of fear”—especially the fear of losing prestige and national status—which drives us from qualitative evaluation back to statistical measures. That fear can prevent faculty and administrators from pushing boundaries and moving outside of their usual assessment methods

“Everyone we’ve talked to on our site visits thinks that the current measures and metrics are inadequate, toxic, or biased,” says Weber, “but thinks that there is some other governing body that determines those metrics—whether it’s a chair, dean, or provost—when in fact, anyone, at any level, can change the process. So, part of our task is just information sharing,” she says, letting people at all levels know that there’s widespread dissatisfaction and that they have the agency to change it.

In addition to conducting workshops, the HuMetrics team plans to make their documents and processes available in an open-access format. They are creating a toolkit that includes a guide faculty and administrators can use to run their own HuMetrics-style workshops. The team is also creating digital tools that will help review committees measure currently overlooked indicators, such as how scholarly works are being incorporated in syllabi.

What role can HuMetrics play at the University of Iowa?

The faculty, staff, and graduate students who participated in the February workshop ended the long day with a great deal of hope for the future. “Collectively determining our values here at the UI and beginning to imagine how those values might transform the ways we work and evaluate our work was exhilarating,” recalls Mangum.

Participants came to the workshop because they believe the current system of evaluation is wanting. Writes Christopher Long, “The metrics used to identify excellence, and on which current tenure and promotion decisions are based, have become a barrier to more exciting and innovative scholarship.” He and his team argue that the metrics are not nuanced enough “to do justice to the most exciting and transformative scholarship of which our most talented faculty are capable. . . . By broadening the scope of what we understand as a contribution to scholarship, we will be better positioned to empower faculty to identify indicators of excellence along more diverse pathways of intellectual leadership.”

Mangum summed up the experience this way: “Among the innumerable discoveries I’ve made through my work at the Obermann Center, nothing is clearer than the widespread desire for a career dedicated to meaningful work rooted in shared values. When students and faculty members can agree upon a mission based on intellectual and cultural values, their inspiration and contributions soar. The rhetoric of ‘productivity’ makes many of us feel like cogs in a machine. The language of values is closer to the reality that we chose to be artists, scholars, researchers, and teachers because we wanted a vocation, not just a job. The pandemic has brought home to many of us that we may be able to find ways to do our work that satisfy our love of learning while also contributing to the public good.”

Join the conversation

- HuMetrics @HuMetricsHSS

- Christopher Long @cplong

- Nicky Agate @terrainsvagues

- Jason Rhody @jasonrhody

- #HuMetricsHSS